Prometheus Overview

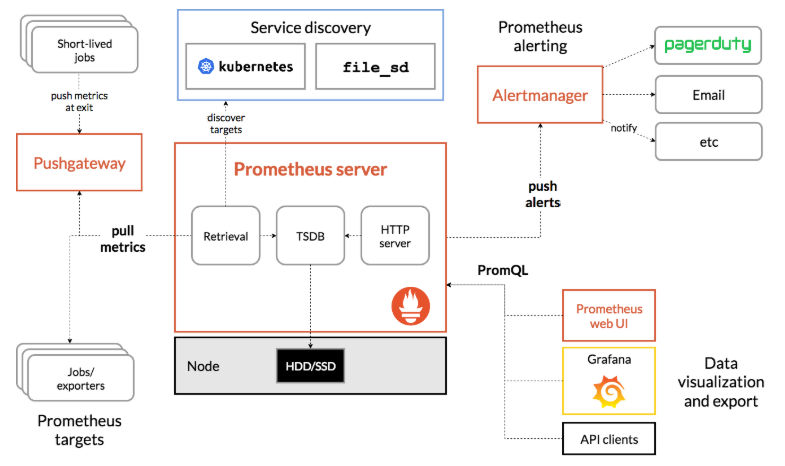

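Prometheus is an open-source service monitoring system and time-series database. It provides a general-purpose data model together with convenient interfaces for collecting, storing, and querying data. Its core component, the Prometheus server, periodically pulls data from monitoring targets that are either statically configured or configured automatically through service discovery. When newly pulled data exceeds the configured in-memory buffer, it is persisted to the storage device.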
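As a rough illustration of the two ways the server can learn about its targets, the scrape configuration sketch below defines one statically configured job and one job that relies on Kubernetes service discovery. The job names, the address `node1.example.com:9100`, and the 15-second interval are assumptions chosen for illustration; they are not taken from the manifests used later in this post.

```yaml
# Hypothetical prometheus.yml fragment; names and addresses are illustrative only.
global:
  scrape_interval: 15s          # how often the server pulls from each target

scrape_configs:
  # Statically configured target: the address is written directly into the config.
  - job_name: static-node-exporter
    static_configs:
      - targets: ['node1.example.com:9100']

  # Service discovery: targets are discovered through the Kubernetes API.
  - job_name: kubernetes-pods
    kubernetes_sd_configs:
      - role: pod
```

In a real cluster, a discovery job like this is usually paired with `relabel_configs` rules that narrow the discovered pods down to the ones that should be scraped; those rules are omitted here to keep the sketch short.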

Prometheus Deployment

- Download

      git clone https://github.com/iKubernetes/k8s-prom.git

- Deploy node_exporter

      cd k8s-prom

      apiVersion: apps/v1

- Deploy Prometheus-server

      cat prometheus-rbac.yaml

      [root@k8s-master01 prometheus]# cat prometheus-cfg.yaml

      [root@k8s-master01 prometheus]# cat prometheus-pvc.yaml

      [root@k8s-master01 prometheus]# cat prometheus-deploy.yaml

- Deploy kube-state-metrics

      [root@k8s-master01 kube-state-metrics]# cat kube-state-metrics-rbac.yaml

      [root@k8s-master01 kube-state-metrics]# cat kube-state-metrics-deploy.yaml

- Create the certificate

      [root@k8s-master pki]# (umask 077; openssl genrsa -out serving.key 2048)

- Deploy k8s-prometheus-adapter

      # The bundled custom-metrics-apiserver-deployment.yaml and custom-metrics-config-map.yaml have problems; download these two files from the k8s-prometheus-adapter project and replace the originals.

      [root@k8s-master k8s-prom]# kubectl get pods -n prom

Data Visualization with Grafana

- Deploy Grafana

      [root@k8s-master01 grafana]# cat grafana-cfg.yaml

      [root@k8s-master01 grafana]# cat grafana-dp.yaml
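Grafana needs a data source that points at the Prometheus server before its dashboards can display anything. One way to express this is a Grafana data source provisioning file, sketched below. The data source name and the URL `http://prometheus.prom.svc:9090` are assumptions (a Service named `prometheus` in the `prom` namespace, listening on port 9090) and should be adjusted to whatever the deployment actually exposes. The source does not show whether `grafana-cfg.yaml` takes this form.

```yaml
# Hypothetical Grafana provisioning file, e.g. mounted under
# /etc/grafana/provisioning/datasources/ inside the Grafana container.
apiVersion: 1
datasources:
  - name: Prometheus            # display name, chosen for illustration
    type: prometheus
    access: proxy               # Grafana's backend issues the queries
    url: http://prometheus.prom.svc:9090   # assumed Service name, namespace, and port
    isDefault: true
```

The same data source can also be added by hand in the Grafana web UI; a provisioning file simply keeps that setting alongside the other manifests.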