etcd backup

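This example uses a k8s cluster installed with kubeadm, where etcd runs as a static pod built from the etcd image, so etcd has to be operated with the etcdctl tool shipped in that image. If etcd is not deployed as a pod, you can back it up by running the etcdctl command directly.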

- View the etcd configuration file

```bash
# By default etcd uses host networking, and its configuration and data are mapped to directories on the host.
```
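On a kubeadm cluster the etcd static-pod manifest normally lives at `/etc/kubernetes/manifests/etcd.yaml` (a kubeadm default, assumed here). The sketch below pulls individual flags such as `--cert-file` or `--data-dir` out of that manifest, which is a quick way to find the certificate paths a backup command needs. The function name `etcd_flag` is hypothetical.

```bash
#!/usr/bin/env bash
# Print the value of one etcd flag (e.g. cert-file) from a static-pod manifest.
# Assumes the kubeadm layout, where each flag is a list item "- --flag=value".
etcd_flag() {
  local manifest="$1" flag="$2"
  # Anchor on "- --flag=" so that e.g. cert-file does not match peer-cert-file.
  grep -m1 -E -- "^[[:space:]]*- --${flag}=" "$manifest" | sed -E "s/^.*--${flag}=//"
}

# Usage (kubeadm default path, adjust as needed):
#   m=/etc/kubernetes/manifests/etcd.yaml
#   for f in listen-client-urls cert-file key-file trusted-ca-file data-dir; do
#     printf '%s=%s\n' "$f" "$(etcd_flag "$m" "$f")"
#   done
```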

- Access the etcd service (inspect the etcd pod's startup flags, certificate paths, and host mounts)

```bash
kubectl describe -n kube-system pod etcd-master
```
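Because etcd runs in a pod, etcdctl can also be invoked inside that container with `kubectl exec`. A minimal sketch, assuming the pod is named `etcd-master` (static pods are named `etcd-<node-name>`), the kubeadm default certificate paths, and etcdctl 3.4 or later, which uses the v3 API by default so `ETCDCTL_API=3` is not needed. The wrapper `etcdctl_in_pod` and the `ETCD_POD` variable are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: run etcdctl inside the kubeadm etcd static pod.
# Assumes kubeadm default cert paths; override the pod name via ETCD_POD.
etcdctl_in_pod() {
  local pod="${ETCD_POD:-etcd-master}"
  local pki=/etc/kubernetes/pki/etcd
  kubectl -n kube-system exec "$pod" -- etcdctl \
    --endpoints https://127.0.0.1:2379 \
    --cacert "$pki/ca.crt" --cert "$pki/server.crt" --key "$pki/server.key" \
    "$@"
}

# Usage:
#   etcdctl_in_pod endpoint health
#   # /var/lib/etcd is a hostPath mount in the kubeadm manifest, so a
#   # snapshot written there also appears on the master node's disk.
#   etcdctl_in_pod snapshot save /var/lib/etcd/snapshot.db
```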

- Back up

```bash
ETCDCTL_API=3 etcdctl --endpoints https://127.0.0.1:2379 snapshot save "/home/supermap/k8s-backup/data/etcd-snapshot/$(date +%Y%m%d_%H%M%S)_snapshot.db"
```
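The command above talks to etcd over HTTPS, and kubeadm's etcd enforces client-certificate auth (`--client-cert-auth=true`), so in practice `--cacert`, `--cert` and `--key` have to be supplied. The sketch below adds them (kubeadm default paths, an assumption), checks the saved file with `etcdctl snapshot status`, and prunes old snapshots. It assumes etcdctl is installed on the master node; `etcd_snapshot`, `prune_snapshots` and the 7-day retention are hypothetical choices.

```bash
#!/usr/bin/env bash
# Sketch: certificate-aware etcd snapshot plus simple retention.
# Assumptions: etcdctl on the master node, kubeadm default PKI paths.
BACKUP_DIR="${BACKUP_DIR:-/home/supermap/k8s-backup/data/etcd-snapshot}"
PKI="${PKI:-/etc/kubernetes/pki/etcd}"

etcd_snapshot() {
  local out
  out="$BACKUP_DIR/$(date +%Y%m%d_%H%M%S)_snapshot.db"
  mkdir -p "$BACKUP_DIR" || return 1
  ETCDCTL_API=3 etcdctl --endpoints https://127.0.0.1:2379 \
    --cacert "$PKI/ca.crt" --cert "$PKI/server.crt" --key "$PKI/server.key" \
    snapshot save "$out" || return 1
  # Print hash, revision, key count and size of the saved file.
  ETCDCTL_API=3 etcdctl snapshot status "$out" -w table
}

# Delete snapshots whose age in whole days (rounded down) is greater than $1.
prune_snapshots() {
  local days="${1:-7}"
  find "$BACKUP_DIR" -maxdepth 1 -type f -name '*_snapshot.db' -mtime +"$days" -print -delete
}

# Usage: etcd_snapshot && prune_snapshots 7
```

Keeping the retention step separate means a failed snapshot never triggers deletion of older, known-good files.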

Backing up and migrating k8s with velero

Install velero

```bash
# Download the latest release
```
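Velero publishes its release tarballs on GitHub under a predictable URL pattern. The helper below builds that URL; the version `v1.4.2` matches the directory name that appears later in this walkthrough, and `velero_release_url` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Build the GitHub release tarball URL for a given velero version/platform.
velero_release_url() {
  local version="$1" platform="${2:-linux-amd64}"
  echo "https://github.com/vmware-tanzu/velero/releases/download/${version}/velero-${version}-${platform}.tar.gz"
}

# Usage (downloads the tarball and installs the CLI binary):
#   v=v1.4.2
#   wget "$(velero_release_url "$v")"
#   tar -xzf "velero-${v}-linux-amd64.tar.gz"
#   cp "velero-${v}-linux-amd64/velero" /usr/local/bin/
```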

Install minio

```bash
# The velero release downloaded above includes a minio deployment
```

- Log in to minio

```text
http://45.192.x.x:30100/minio/velero/
```

- Create the credentials file

```bash
cat > credentials-velero <<EOF
```
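Velero's AWS object-store plugin, which is also what talks to MinIO, reads an AWS-style shared credentials file. A minimal sketch that writes one; `minio`/`minio123` are the defaults in Velero's bundled MinIO example and are assumed here, so substitute your own keys. `write_velero_credentials` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Write an AWS-style credentials file for velero's aws plugin (used with MinIO).
# umask 077 in a subshell makes a newly created file readable by its owner only.
write_velero_credentials() {
  local file="$1" key="$2" secret="$3"
  (
    umask 077
    cat > "$file" <<EOF
[default]
aws_access_key_id = ${key}
aws_secret_access_key = ${secret}
EOF
  )
}

# Usage: write_velero_credentials ./credentials-velero minio minio123
```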

- Install velero

```bash
velero install \
```

```
[root@master velero-v1.4.2-linux-amd64]# kubectl get pod -n velero
```
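For an in-cluster MinIO, Velero's quick-start documentation uses `velero install` flags along the lines of the sketch below. The plugin image tag is an assumption and should match your Velero version per the plugin compatibility matrix; the bucket name `velero` follows the MinIO URL above. Wrapping the command in a function with an overridable `VELERO` binary (a hypothetical convenience) lets you preview the exact command with `VELERO=echo`.

```bash
#!/usr/bin/env bash
# Sketch of a MinIO-backed velero install.
# Assumptions: bucket "velero" exists in MinIO, MinIO is reachable in-cluster
# at minio.velero.svc:9000, and the aws plugin tag below suits your velero version.
install_velero_minio() {
  local s3url="${1:-http://minio.velero.svc:9000}"
  "${VELERO:-velero}" install \
    --provider aws \
    --plugins velero/velero-plugin-for-aws:v1.0.0 \
    --bucket velero \
    --secret-file ./credentials-velero \
    --use-volume-snapshots=false \
    --backup-location-config "region=minio,s3ForcePathStyle=true,s3Url=${s3url}"
}

# Preview:  VELERO=echo install_velero_minio
# Install:  install_velero_minio && kubectl -n velero rollout status deployment/velero
```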

Backup drill

- Create test resources

```bash
# The velero release ships a ready-made test demo
```

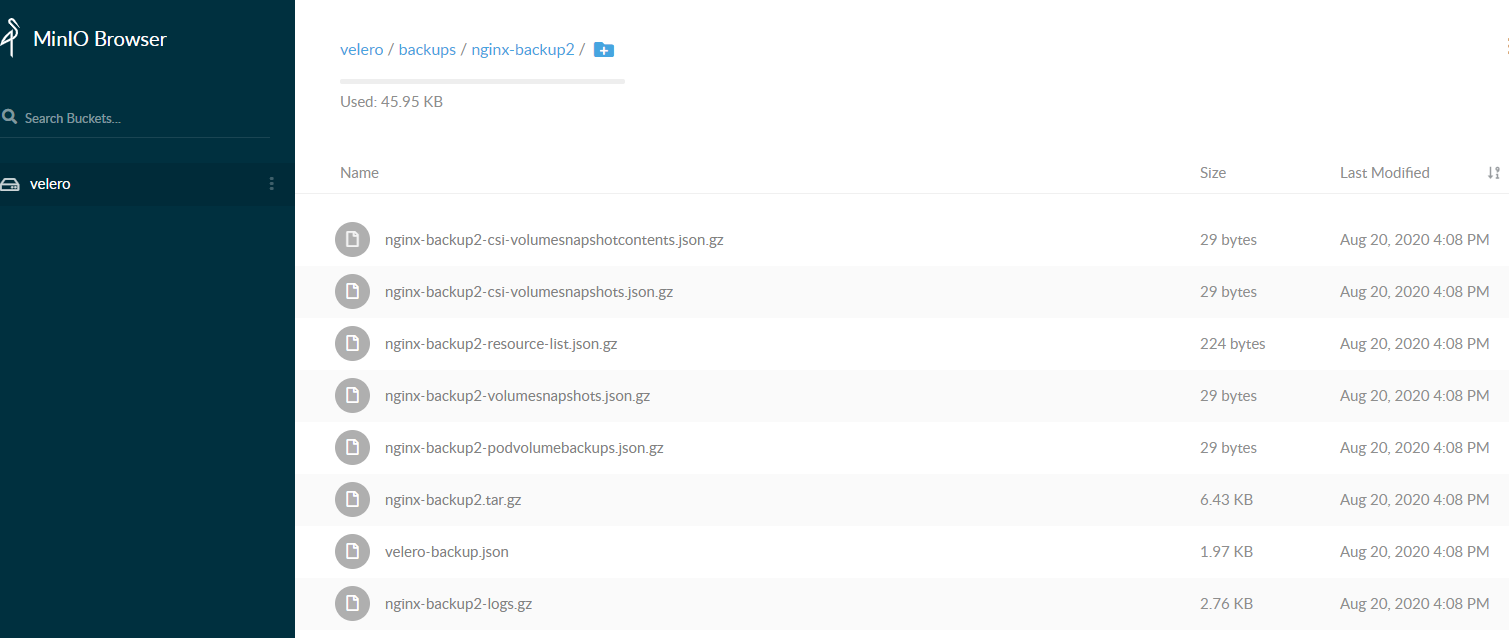

- Create a backup

```bash
velero backup create nginx-backup --include-namespaces nginx-example
```

- List backups

```
[root@master examples]# velero backup get
```
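`velero backup get` shows the state at one moment; for scripting it helps to poll until the backup reaches a terminal phase. A sketch that reads `.status.phase` of the `backups.velero.io` custom resource with kubectl, assuming Velero runs in the `velero` namespace; `wait_backup`, the 5-second interval and the 300-second default timeout are hypothetical choices. When creating a backup interactively, `velero backup create ... --wait` is the built-in alternative.

```bash
#!/usr/bin/env bash
# Poll a Velero Backup until it reaches a terminal phase.
# Returns 0 on Completed, 1 on a failure phase, 2 on timeout.
wait_backup() {
  local name="$1" timeout="${2:-300}" interval=5 waited=0 phase=""
  while (( waited < timeout )); do
    phase=$(kubectl -n velero get backups.velero.io "$name" \
      -o jsonpath='{.status.phase}' 2>/dev/null)
    case "$phase" in
      Completed)
        echo "$name: $phase"; return 0 ;;
      Failed|PartiallyFailed|FailedValidation)
        echo "$name: $phase" >&2; return 1 ;;
    esac
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "$name: timed out (last phase: ${phase:-unknown})" >&2
  return 2
}

# Usage: wait_backup nginx-backup && velero backup describe nginx-backup
```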

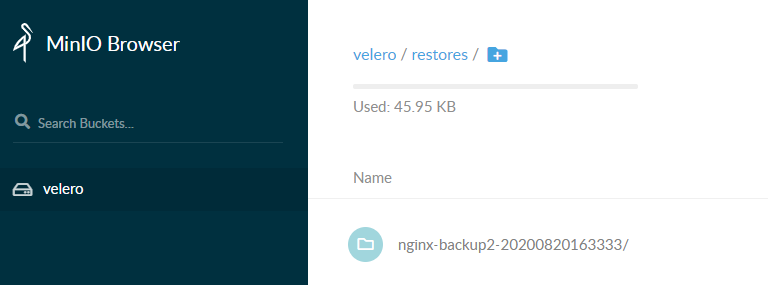

- Restore test

```bash
# Delete the namespace outright
```
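The restore half of the drill: once the namespace is gone, recreate it from the backup and check that the pods come back. A sketch assuming the `nginx-example` namespace and `nginx-backup` backup used above; `restore_drill` is a hypothetical helper, and `--wait` makes `velero restore create` block until the restore finishes.

```bash
#!/usr/bin/env bash
# Restore drill: delete a namespace, restore it from a velero backup,
# then list its pods. Stops at the first failing step.
restore_drill() {
  local ns="${1:-nginx-example}" backup="${2:-nginx-backup}"
  # --wait=true blocks until the namespace and its objects are gone.
  kubectl delete namespace "$ns" --wait=true || return 1
  velero restore create --from-backup "$backup" --wait || return 1
  kubectl -n "$ns" get pods
}

# Usage: restore_drill nginx-example nginx-backup
```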

- Related commands

```bash
velero backup get  # list backups
```

```bash
velero create backup NAME
```

- Scheduled backups

```bash
# Back up daily at 1:00
```
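A daily 1:00 schedule can be created with `velero schedule create` and a cron expression. The Velero server container usually runs in UTC, so the cron hour is likely evaluated in UTC rather than local time (an assumption to verify on your cluster); for a cluster in UTC+8, 1:00 local is 17:00 UTC. The sketch converts the hour and creates the schedule; `utc_hour`, `create_daily_schedule` and the 7-day `--ttl` are hypothetical choices.

```bash
#!/usr/bin/env bash
# Convert a local hour (0-23) to the UTC hour for a whole-hour UTC offset.
# Bash's % keeps the sign, so add 24 before the final modulo.
utc_hour() {
  local local_hour="$1" offset="$2"
  echo $(( ( (local_hour - offset) % 24 + 24 ) % 24 ))
}

# Create a daily schedule for one namespace, keeping backups for 7 days.
create_daily_schedule() {
  local name="$1" ns="$2" local_hour="$3" offset="$4"
  velero schedule create "$name" \
    --schedule="0 $(utc_hour "$local_hour" "$offset") * * *" \
    --include-namespaces "$ns" \
    --ttl 168h0m0s
}

# Usage: create_daily_schedule nginx-daily nginx-example 1 8
```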

Backing up to Alibaba Cloud OSS with velero

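- Prepare an OSS bucket and the corresponding API keys

Creating the bucket with the Infrequent Access (IA) storage class is recommended, since it costs less.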

- Download the velero plugin

```bash
git clone https://github.com/AliyunContainerService/velero-plugin
```

- Edit the configuration files

Edit `install/credentials-velero` and fill in the `AccessKeyID` and `AccessKeySecret` obtained for the newly created RAM user.

```bash
vim install/01-velero.yaml
```
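`install/credentials-velero` is a plain `KEY=value` file. A sketch that writes it from two environment variables; the key names `ALIBABA_CLOUD_ACCESS_KEY_ID` and `ALIBABA_CLOUD_ACCESS_KEY_SECRET` are assumed to match the template in the cloned velero-plugin repository, so check them against your copy. `write_oss_credentials` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Write the Alibaba Cloud credentials file used by velero-plugin.
# Key names are assumed from the plugin's install/credentials-velero template.
write_oss_credentials() {
  local file="${1:-install/credentials-velero}"
  if [[ -z "${ALIBABA_CLOUD_ACCESS_KEY_ID:-}" || -z "${ALIBABA_CLOUD_ACCESS_KEY_SECRET:-}" ]]; then
    echo "set ALIBABA_CLOUD_ACCESS_KEY_ID and ALIBABA_CLOUD_ACCESS_KEY_SECRET" >&2
    return 1
  fi
  (
    umask 077  # a newly created file is readable by its owner only
    printf 'ALIBABA_CLOUD_ACCESS_KEY_ID=%s\nALIBABA_CLOUD_ACCESS_KEY_SECRET=%s\n' \
      "$ALIBABA_CLOUD_ACCESS_KEY_ID" "$ALIBABA_CLOUD_ACCESS_KEY_SECRET" > "$file"
  )
}

# Usage:
#   ALIBABA_CLOUD_ACCESS_KEY_ID=... ALIBABA_CLOUD_ACCESS_KEY_SECRET=... write_oss_credentials
```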

- Create the namespace and secret

```bash
kubectl create namespace velero
```
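Besides the namespace, the credentials file has to be stored in a secret that the velero Deployment mounts. The plugin's README names it `cloud-credentials` with key `cloud`; that is assumed here, so match whatever your `install/01-velero.yaml` references. Piping `--dry-run=client -o yaml` into `kubectl apply` makes both steps safe to re-run (`--dry-run=client` needs kubectl 1.18 or newer). `ensure_velero_secret` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Idempotently create the velero namespace and the credentials secret.
# Secret name/key (cloud-credentials / cloud) are assumptions; they must match
# what the velero Deployment in install/01-velero.yaml mounts.
ensure_velero_secret() {
  local file="${1:-install/credentials-velero}"
  kubectl create namespace velero --dry-run=client -o yaml | kubectl apply -f - || return 1
  kubectl create secret generic cloud-credentials --namespace velero \
    --from-file "cloud=${file}" --dry-run=client -o yaml | kubectl apply -f -
}

# Usage: ensure_velero_secret install/credentials-velero
```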

- Create the resources

```bash
# Deploy velero
```

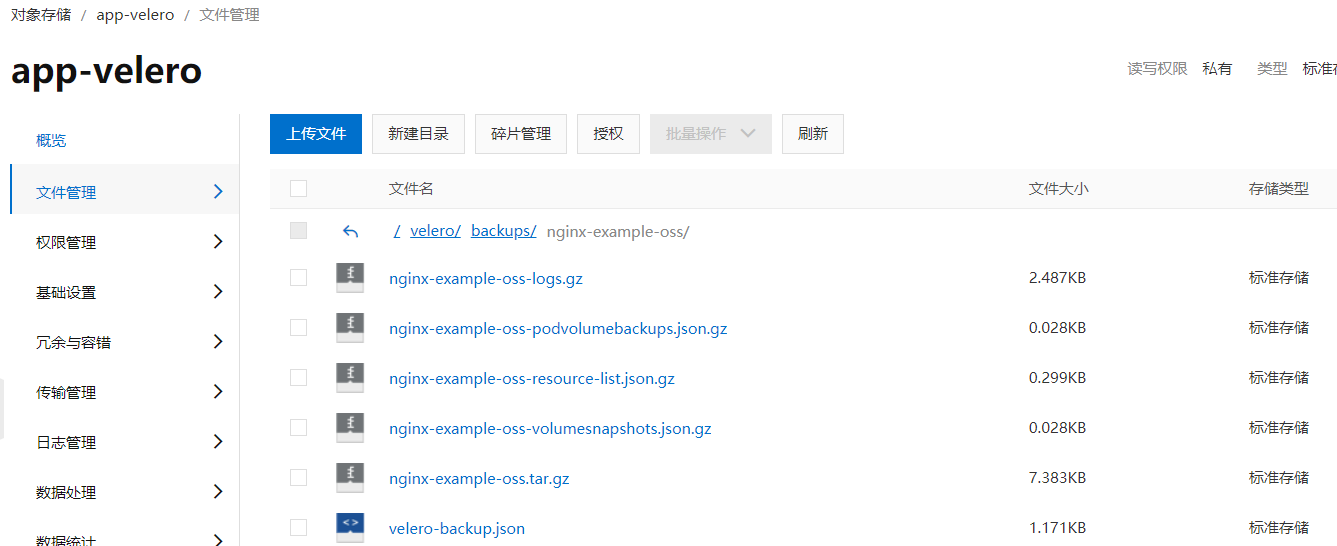

- Backup and restore

```bash
velero backup create nginx-example-oss --include-namespaces nginx-example
```

- Notes

1. During a velero backup, objects created while the backup is in progress are not included in it.