Consumer

`# celery_task1.py`

Producer

`# produce_task.py`

Running

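`celery worker -A celery_task1 -l info -P eventlet`

On Celery 5 and later, `-A` must come before the subcommand: `celery -A celery_task1 worker -l info -P eventlet`.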

First start the worker from the command line with `celery worker xxx` so that it begins listening for tasks.

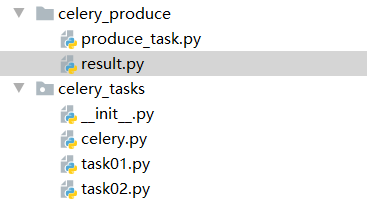

Multi-task structure

`# celery.py`

`# task01.py`

`# produce_task.py`

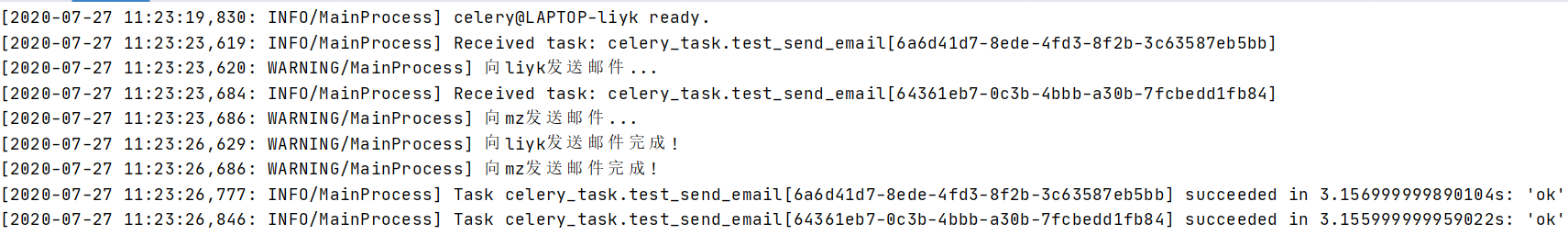

Running

`# Run from outside the celery_tasks directory`

Running scheduled tasks with Celery

`# Set a time for Celery to run a scheduled task`

`# celery.py in the multi-task structure`